Chain-of-Thought (CoT) prompting is generally hypothesized to improve interpretability in LLMs compared to Direct Output generation, especially for complex, multi-step problem-solving tasks. CoT aims to enhance transparency, trust, and explainability by making the model’s reasoning process explicit through intermediate natural language steps. However, the actual improvement can depend on factors like task complexity, the quality of generated CoT, and user perception, with Direct Output potentially being preferred for simpler tasks or when brevity is paramount.

1. Defining Interpretability in the Context of LLMs

1.1. Key Aspects of Interpretability: Transparency, Trust, and Explainability

Interpretability in Large Language Models (LLMs) is a multifaceted concept crucial for their responsible deployment, encompassing transparency, trust, and explainability. Transparency refers to the extent to which a user can understand the internal mechanisms and decision-making processes of an LLM. However, the inherent complexity and “black box” nature of modern LLMs, often with billions of parameters, make full transparency a significant challenge. Trust is built when users can rely on the model’s outputs and believe in its reasoning, which depends heavily on how well they can interpret and verify its decisions. Explainability, a key component of interpretability, focuses on providing human-understandable reasons for the model’s outputs, either through post-hoc analysis or by designing models that inherently produce explanations. The ability to explain why an LLM generated a particular response is vital for debugging, improving model performance, and ensuring fairness and accountability, especially in high-stakes applications. The increasing adoption of LLMs across domains underscores the need for robust interpretability methods that address these aspects, since traditional model introspection techniques are often insufficient for systems this complex.

The challenge of interpretability is amplified by the scale and architecture of contemporary LLMs. Traditional machine learning interpretability methods, such as feature importance analysis or decision tree visualization, are not directly applicable or sufficient for LLMs because of their massive size and the intricate, non-linear interactions within their neural networks. This opacity undermines transparency, since it is difficult to discern how inputs are transformed into outputs. It also makes trust harder to build: if users cannot understand the reasoning behind an LLM’s decision, they are less likely to trust its outputs, particularly in critical scenarios. Explainability aims to bridge this gap by providing insight into the model’s behavior, often through techniques that highlight influential input features, reveal internal representations, or generate natural language justifications for the model’s predictions. Effective explainability techniques are therefore paramount for fostering user confidence, supporting ethical AI practices, and enabling the broader and safer integration of LLMs into society. The research community is actively exploring avenues ranging from the analysis of attention mechanisms to novel evaluation methodologies to improve the interpretability of these models.

1.2. The Role of Natural Language Explanations (Rationales and Chain-of-Thought)

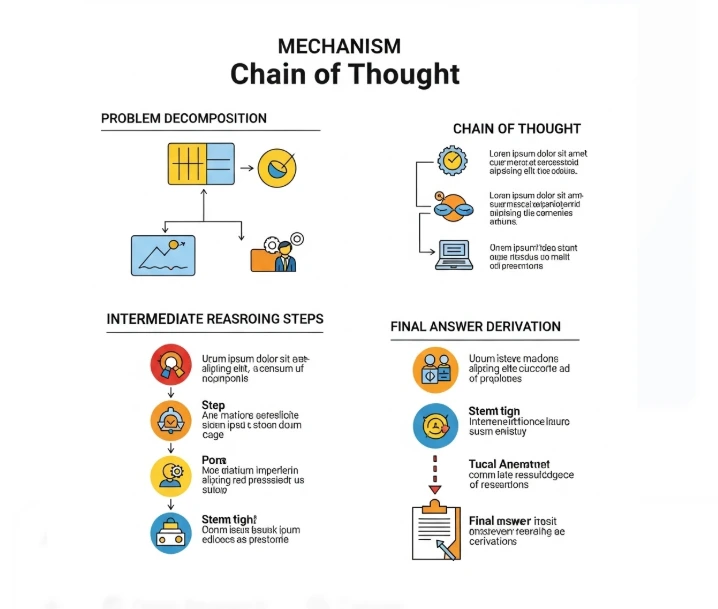

Natural language explanations (NLEs), including rationales and Chain-of-Thought (CoT) prompting, play a pivotal role in enhancing the interpretability of Large Language Models (LLMs) by providing human-readable justifications for their outputs. Rationales are textual explanations that accompany a model’s prediction, detailing the reasoning steps or key pieces of evidence that led to a particular conclusion. CoT prompting, a specific technique for eliciting NLEs, instructs the LLM to generate intermediate reasoning steps before arriving at a final answer, effectively “showing its work”. This approach is particularly beneficial for complex, multi-step problems where the final output alone is not enough to understand the model’s logic. By articulating its reasoning in natural language, an LLM makes its decision-making more transparent and accessible to human users, which fosters trust and makes it easier for users to verify the output, especially in high-stakes domains. When errors occur, the explicit steps can also help pinpoint where the logic deviated.

The effectiveness of NLEs, including CoT, stems from their ability to bridge the gap between the complex internal computations of an LLM and human understanding. Instead of relying solely on abstract representations or feature attributions, NLEs offer a narrative of the model’s “thought process”. This can be particularly useful for identifying errors, biases, or flawed reasoning. For instance, if a CoT explanation reveals an incorrect intermediate step, it provides a clear point for debugging or refining the model. NLEs can also support user engagement and collaboration, letting users interact with the model’s reasoning and potentially correct or guide it. Generating faithful, accurate, and comprehensive NLEs is an active area of research, with ongoing efforts to ensure that these explanations truly reflect the model’s internal reasoning rather than being plausible-sounding but misleading justifications. Integrating NLEs into LLM interaction paradigms is widely seen as a crucial step towards more trustworthy and reliable AI systems.

Further developments in CoT include “Guided CoT Templates” and “ReAct” (Reasoning and Acting). Guided CoT templates provide a structured, predefined framework of logical steps that steers the model’s reasoning toward a more systematic and interpretable process. ReAct, by contrast, interleaves step-by-step reasoning with task-specific actions, allowing models to run iterative reasoning-and-action cycles. A further distinction is drawn between “Standard Prompting” (direct response), CoT prompting (reasoning and response generated together), and “Explicit CoT” (reasoning and response generated in separate, decomposed stages); Explicit CoT is argued to yield clearer, more systematic reasoning and better transparency.
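As a rough illustration of a guided CoT template, the sketch below prepends a fixed, numbered list of steps to a problem. The step wording targets cost-calculation problems and, like the names `GUIDED_STEPS` and `guided_cot_prompt`, is hypothetical rather than a template taken from the cited work.

```python
# Hypothetical predefined steps for cost-calculation problems; wording is illustrative.
GUIDED_STEPS = [
    "Identify every item, its quantity, and its unit price.",
    "Compute the subtotal for each item (quantity x unit price).",
    "Add the subtotals to get the total.",
    "State the final answer on its own line as 'Answer: <number>'.",
]


def guided_cot_prompt(problem, steps=GUIDED_STEPS):
    """Build a prompt that asks the model to follow a fixed sequence of reasoning steps."""
    numbered = "\n".join(f"Step {i}: {s}" for i, s in enumerate(steps, start=1))
    return (
        "Solve the problem by following these steps in order.\n"
        f"{numbered}\n\n"
        f"Problem: {problem}"
    )


print(guided_cot_prompt("A student buys 3 ride tickets at $2.50 each. What is the total cost?"))
```

Fixing the steps in advance makes CoT outputs from different problems easier to compare, at the cost of constraining how the model may reason.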

2. Experimental Design: Comparing CoT and Direct Output

2.1. Task Selection: Complex, Multi-Step Problem Solving

The selection of appropriate tasks is crucial for investigating the comparative interpretability of Chain-of-Thought (CoT) prompting and Direct Output generation in Large Language Models (LLMs). The core objective is to evaluate these approaches in scenarios that demand complex, multi-step problem-solving, since these are the contexts where the benefits of CoT, particularly its step-by-step reasoning process, are hypothesized to be most pronounced. Tasks must be challenging enough to require a non-trivial reasoning process, allowing a clear distinction between a simple, direct answer and a more elaborate, stepwise solution. The problems should ideally be drawn from domains familiar to the participant group (students) so that they can engage with the content and evaluate the interpretability of the LLM’s output effectively. The complexity should arise from the need to perform multiple logical or arithmetic operations, integrate information from different parts of a problem statement, or apply a series of concepts to arrive at a solution. Initial explorations considered math word problems and logic puzzles, with a focus on those requiring several steps of deduction or inference.

The “Carnival Booth Arithmetic” problem, which involves calculating a total cost from multiple items and quantities, is a good example of a task with a clear, multi-step solution path that a CoT-prompted LLM can explicitly articulate. Similarly, Complex Problem Solving (CPS) tasks, as described in the literature, require individuals to explore, understand, and control problem environments that are often unknown, non-transparent, and composed of numerous interconnected elements. The MicroDYN approach, a specific type of CPS task, presents problems of increasing complexity in the number of input and output variables and their interconnections, often structured into a knowledge acquisition phase and a knowledge application phase. Such tasks, like the “Game Night” example in which solvers must work out how different inputs affect various outputs, are well suited to evaluating LLM interpretability because they push the limits of model reasoning and explanation. Scientific inquiry tasks, such as those from PISA 2015 that use simulations to determine the effects of multiple variables (e.g., the “Running in Hot Weather” task), also offer a rich source of complex, multi-step problems requiring logical reasoning to connect data to conclusions. The final task set will be curated to span a range of difficulties and reasoning types, so that tasks are complex enough to reveal differences in interpretability yet solvable by both students and LLMs.
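The full text of the Carnival Booth problem is not reproduced here, so the sketch below uses made-up items and prices to show how a multi-step cost task and its reference solution path might be stored for the experiment. `TaskItem`, `make_cost_task`, and every item and price are hypothetical; the generated reference steps could later serve as a ground-truth explanation.

```python
from dataclasses import dataclass, field


@dataclass
class TaskItem:
    """One experimental problem with a reference solution path (field names are illustrative)."""
    problem: str
    reference_steps: list = field(default_factory=list)
    answer: float = 0.0


def make_cost_task(purchases):
    """Build a hypothetical 'total cost' task from (item, quantity, unit_price) triples."""
    lines, steps, total = [], [], 0.0
    for item, qty, price in purchases:
        subtotal = qty * price
        total += subtotal
        lines.append(f"{qty} {item} at ${price:.2f} each")
        steps.append(f"{qty} x ${price:.2f} = ${subtotal:.2f} for {item}")
    steps.append(f"Total = ${total:.2f}")  # final step mirrors the expected answer
    problem = "A student buys " + ", ".join(lines) + ". What is the total cost?"
    return TaskItem(problem=problem, reference_steps=steps, answer=total)


task = make_cost_task([("ride tickets", 3, 2.50), ("lemonade", 2, 1.25)])
print(task.problem)
print(task.reference_steps)
```

Each subtotal becomes one reference step, which is the kind of explicit intermediate reasoning a CoT response would be expected to show.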

2.2. Participant Group: Students

The participant group for this study will consist of students. This choice is motivated by several factors relevant to the research question. Students represent a key demographic that increasingly interacts with AI-powered tools for learning and problem-solving. Their familiarity with educational contexts and structured problem-solving makes them well-suited to evaluate the clarity and helpfulness of LLM-generated explanations. Furthermore, students are often engaged in tasks that require multi-step reasoning, aligning with the types of problems selected for the experiment. Their cognitive processes and learning strategies are also of interest when assessing how different AI explanation styles impact understanding and trust.

The age group and educational level of the students will be selected to match the complexity of the chosen tasks, ensuring they have the necessary foundational knowledge to engage with the problems meaningfully. For instance, if tasks involve middle school mathematics or logic, students from corresponding grade levels would be appropriate participants. The study aims to gather insights into how this specific user group perceives and benefits from CoT versus Direct Output, providing valuable feedback for the design of educational AI tools and for understanding the broader implications of LLM interpretability for younger users. Ethical considerations, including informed consent and data privacy, will be paramount when working with student participants.

2.3. Prompting Strategies

The experiment will compare two primary prompting strategies for LLMs: Chain-of-Thought (CoT) prompting and Direct Output generation. The goal is to assess their relative impact on the interpretability of the model’s responses to complex, multi-step problems. For each selected problem, both types of prompts will be designed to elicit the same correct final answer from the LLM, ensuring that any differences in perceived interpretability are due to the presence or absence of the reasoning chain, not answer accuracy.

The following table summarizes the key characteristics of the two prompting strategies:

| Feature | Chain-of-Thought (CoT) Prompting | Direct Output Generation |
|---|---|---|
| Objective | Elicit explicit, step-by-step reasoning before the final answer | Obtain only the final answer without intermediate steps |
| Mechanism | Instructs LLM to “think step by step,” show calculations, or use templates/few-shot examples to guide reasoning | Presents the problem and asks for the solution directly |
| Output Structure | Intermediate reasoning steps articulated in natural language, followed by the final answer | Only the final answer is provided |
| Expected Benefit | Enhanced transparency, trust, and explainability; easier error identification | Brevity and speed; potentially preferred for simple tasks |
| Example Prompt Phrasing (Carnival Booth Problem) | “You are a helpful assistant. Solve the problem step by step, showing all your calculations. Finally, provide the answer.” | “You are a helpful assistant. Solve the problem and provide the final answer.” |
| Primary Use Case | Complex tasks requiring multi-step reasoning, educational settings, debugging | Tasks where only the final result is needed, or speed is critical |

Table 1: Comparison of Chain-of-Thought (CoT) Prompting and Direct Output Generation Strategies

2.3.1. Chain-of-Thought (CoT) Prompting

Chain-of-Thought (CoT) prompting is a technique designed to elicit a step-by-step reasoning process from a Large Language Model (LLM) before it arrives at a final answer. This method aims to make the model’s “thinking” more transparent and interpretable, particularly for complex tasks that require multiple logical or computational steps. Instead of producing a direct, immediate output, the LLM is guided to articulate the intermediate stages of its reasoning, mimicking a human-like problem-solving approach. In the proposed experiment, CoT prompting will be implemented by explicitly instructing the LLM within the prompt to detail its reasoning. This can be achieved through various phrasings, such as “Please provide step-by-step reasoning before your final answer,” “Think through the problem step by step, explaining your logic, and then give the answer,” or “Let’s think step by step.” For the “Carnival Booth Arithmetic” problem, the prompt is: “You are a helpful assistant. Solve the problem step by step, showing all your calculations. Finally, provide the answer.” This prompt instructs the LLM to decompose the problem, perform and display all intermediate calculations, and then state the final numerical answer. CoT prompts can be further structured with templates or few-shot examples to improve consistency and clarity. The benefits of CoT prompting extend beyond potentially improved accuracy; enhanced interpretability is a key advantage, allowing users to follow the model’s logic and identify potential errors.
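A minimal sketch of assembling the CoT condition, assuming a chat-style API that accepts a list of role/content messages. The instruction text is the Carnival Booth prompt quoted above; placing it in a system message, the optional few-shot pairs, and the name `build_cot_messages` are assumptions, and the call to an actual model is omitted.

```python
COT_INSTRUCTION = (
    "You are a helpful assistant. Solve the problem step by step, "
    "showing all your calculations. Finally, provide the answer."
)


def build_cot_messages(problem, few_shot=()):
    """Return chat messages for the CoT condition.

    few_shot: optional (example_problem, worked_solution) pairs shown before the
    real problem, so the model sees what a step-by-step answer looks like.
    """
    messages = [{"role": "system", "content": COT_INSTRUCTION}]
    for example_problem, worked_solution in few_shot:
        messages.append({"role": "user", "content": example_problem})
        messages.append({"role": "assistant", "content": worked_solution})
    messages.append({"role": "user", "content": problem})
    return messages


example = ("2 pens at $1.50 each. Total?", "2 x $1.50 = $3.00. Answer: 3.00")
for message in build_cot_messages("3 tickets at $2.50 each. Total?", few_shot=[example]):
    print(message["role"], "->", message["content"])
```

Keeping the instruction in a single constant makes it easy to hold the wording fixed across every problem in the CoT condition.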

2.3.2. Direct Output Generation

Direct Output generation, in contrast to Chain-of-Thought (CoT) prompting, has the LLM provide a final answer without explicitly detailing intermediate reasoning steps. This is the more traditional mode of interaction with AI models, in which the user receives an outcome or conclusion directly. In this experiment, Direct Output serves as the baseline (control) condition against which the interpretability of CoT prompting is compared. Direct Output prompts will be neutral and straightforward, simply presenting the problem and asking for the solution without any instruction to show working or explain the reasoning. For the “Carnival Booth Arithmetic” problem, the Direct Output prompt is: “You are a helpful assistant. Solve the problem and provide the final answer.” A critical requirement for this condition is that the LLM produces the correct final answer for each selected problem, because the study aims to isolate the effect of the presence or absence of the reasoning chain on interpretability, not the effect of answer correctness. Including a Direct Output condition also makes it possible to assess whether the additional cognitive load and time needed to process a CoT explanation is justified by a significant improvement in perceived interpretability. While CoT is hypothesized to be more interpretable, Direct Output has the advantage of brevity and speed.
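Because the Direct Output condition is only usable when the final answer is correct, responses could be screened automatically before they reach students. The sketch below assumes numeric answers and treats the last number in a response as its final answer; this heuristic and the names `build_direct_messages`, `extract_final_number`, and `is_correct` are illustrative, and ambiguous responses would still need a manual check.

```python
import re

DIRECT_INSTRUCTION = "You are a helpful assistant. Solve the problem and provide the final answer."

# Optional sign, digits with optional thousands separators, optional decimal part.
NUMBER = re.compile(r"-?\d+(?:,\d{3})*(?:\.\d+)?")


def build_direct_messages(problem):
    """Chat messages for the Direct Output condition (message layout is an assumption)."""
    return [
        {"role": "system", "content": DIRECT_INSTRUCTION},
        {"role": "user", "content": problem},
    ]


def extract_final_number(response):
    """Return the last number in the response as a float, or None if there is none."""
    matches = NUMBER.findall(response)
    if not matches:
        return None
    return float(matches[-1].replace(",", ""))


def is_correct(response, expected, tol=1e-6):
    """True if the response's final number matches the expected answer within tol."""
    value = extract_final_number(response)
    return value is not None and abs(value - expected) <= tol


print(is_correct("The total cost is $10.00.", 10.0))
```

The same check can be applied to CoT responses, so that both conditions are shown to students only for problems the model answered correctly.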

3. Metrics for Evaluating Interpretability

The evaluation of interpretability in LLMs will employ both objective and subjective metrics to provide a comprehensive understanding of the impact of CoT versus Direct Output. This multi-faceted approach allows for the assessment of not only the semantic quality of the explanations but also the user’s perception and experience.

The following table summarizes the key metrics for evaluating interpretability:

| Metric Category | Metric Name | Description | How it Measures Interpretability | Data Collection Method |
|---|---|---|---|---|
| Objective | Answer Semantic Similarity (ASS) | Measures semantic alignment between LLM output and ground-truth explanation using embeddings (e.g., cosine similarity) | Higher similarity indicates better alignment with expected/correct reasoning or factual explanation | Automated comparison of LLM output with reference |
| Objective | LLM-based Accuracy (Acc) | Uses an evaluator LLM to judge whether the generated answer correctly reflects the facts in the ground-truth | Assesses factual correctness of the explanation against a known standard | Prompting an evaluator LLM |
| Objective | LLM-based Completeness (Cm) | Uses an evaluator LLM to judge whether the generated answer covers all key points in the ground-truth | Assesses thoroughness of the explanation against a known standard | Prompting an evaluator LLM |
| Objective | LLM Answer Validation (LAV) | Uses an evaluator LLM for binary (valid/invalid) validation of response correctness against the ground-truth | Gives a binary correctness judgment intended to align closely with human judgment | Prompting an evaluator LLM |
| Subjective | Student Perceptions (Likert-Scale Surveys) | Quantifies student ratings on clarity, trustworthiness, completeness, understandability, and helpfulness of explanations | Captures user experience and judgment, reflecting how well explanations aid understanding and build trust | Post-survey questionnaires administered to students |

Table 2: Metrics for Evaluating Interpretability in LLMs

3.1. Objective Metric: Answer Semantic Similarity (ASS)

Answer Semantic Similarity (ASS) is an objective metric used to evaluate the interpretability of LLMs by measuring how closely the meaning of a model-generated response aligns with a ground-truth answer or explanation. It typically involves generating vector representations (embeddings) for both the LLM’s output and the reference text with a pre-trained language model encoder. The similarity between these embeddings is then calculated, often as cosine similarity, to produce a score that quantifies their semantic alignment. A higher ASS score indicates that the LLM’s response is semantically closer to the expected or correct explanation, suggesting better interpretability in terms of conveying the intended meaning. This is particularly useful for assessing whether an LLM can faithfully reproduce or align with a known reasoning process or factual explanation, especially in context-specific scenarios. ASS provides an automated and scalable way to gauge the quality of LLM explanations, complementing other evaluation methods.
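The computation can be sketched as follows. A real study would obtain embeddings from a pre-trained encoder; to keep the example self-contained, the hypothetical `toy_embed` substitutes a bag-of-words count vector, which is an assumption and a much weaker notion of meaning. Only the cosine step corresponds directly to the metric described above.

```python
import math
import re
from collections import Counter


def toy_embed(text):
    """Stand-in for a pre-trained encoder: a bag-of-words count vector (assumption)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine_similarity(u, v):
    """Cosine similarity between two sparse vectors stored as {dimension: value} dicts."""
    dot = sum(u[k] * v.get(k, 0) for k in u)
    norm_u = math.sqrt(sum(x * x for x in u.values()))
    norm_v = math.sqrt(sum(x * x for x in v.values()))
    if norm_u == 0 or norm_v == 0:
        return 0.0  # an empty text has no direction; treat it as unrelated
    return dot / (norm_u * norm_v)


def answer_semantic_similarity(generated, reference, embed=toy_embed):
    """ASS score: cosine similarity of the two texts' embeddings."""
    return cosine_similarity(embed(generated), embed(reference))


print(answer_semantic_similarity(
    "3 tickets at 2.50 cost 7.50, plus 2.50 of lemonade, so the total is 10.00",
    "The tickets cost 7.50 and the lemonade costs 2.50, so the total is 10.00",
))
```

Because count vectors are non-negative, this stand-in only produces scores between 0 and 1; dense encoder embeddings can yield values anywhere from -1 to 1.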

3.1.1. Measuring Alignment with Ground-Truth Explanations

Measuring alignment with ground-truth explanations is a critical aspect of evaluating the interpretability of LLMs, and Answer Semantic Similarity (ASS) serves as a key metric in this regard. Ground-truth explanations are pre-defined, correct, and often human-annotated justifications or reasoning processes for a given input. The goal is to assess how well an LLM’s generated explanation matches this established standard of correctness and completeness. ASS does this by quantifying the semantic resemblance between the LLM’s output and the ground-truth explanation. A high ASS score suggests that the LLM articulated its reasoning in a way that is semantically consistent with the expected logical steps or factual basis. This is particularly important where the reasoning process is as valuable as the final output, such as in educational settings or complex decision-support systems. The CDK-E methodology, for example, uses ASS to evaluate the alignment of LLM responses with ground-truth data within divergent contexts, helping to check that the model’s explanations are not only plausible but also factually accurate and contextually relevant.

3.1.2. Utilizing Large Language Model-Based Metrics for Accuracy and Completeness

Beyond semantic similarity, LLM-based metrics are increasingly used to evaluate the accuracy and completeness of generated explanations, offering a more nuanced assessment of interpretability. These metrics use other (often more capable or specialized) LLMs as “evaluator LLMs” that judge the quality of the outputs from the “evaluated LLM.” For instance, the Context-Driven Divergent Knowledge Evaluation (CDK-E) methodology proposes LLM-based Accuracy (Acc) and LLM-based Completeness (Cm). The Acc metric assesses whether the LLM-generated answer correctly reflects the factual information in the ground-truth, typically by prompting the evaluator LLM with a question such as “Does the LLM-generated answer correctly reflect the facts presented in the ground-truth?” Similarly, the Cm metric evaluates whether the generated answer includes all key points mentioned in the ground-truth, using a prompt such as “Does the LLM-generated answer cover all key points mentioned in the ground-truth?” A third LLM-based metric, LLM Answer Validation (LAV), uses an LLM to perform a binary validation of whether the generated response is correct when compared to the ground-truth. By producing a straightforward “valid” or “invalid” judgment, LAV aims for consistency with human judgments, and CDK-E treats it as its main metric because it most closely aligns with human evaluative processes.
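A sketch of how the evaluator prompts and verdicts might be handled in practice. The Acc and Cm questions are the ones quoted above; the LAV wording, the one-word YES/NO reply format, and the helper names are assumptions, and the call to the evaluator LLM itself is left out because it depends on the provider's API.

```python
import re

# Acc and Cm questions are quoted from the CDK-E description; the LAV wording is an assumption.
METRIC_QUESTIONS = {
    "Acc": "Does the LLM-generated answer correctly reflect the facts presented in the ground-truth?",
    "Cm": "Does the LLM-generated answer cover all key points mentioned in the ground-truth?",
    "LAV": "Is the LLM-generated answer correct when compared to the ground-truth?",
}


def build_evaluator_prompt(metric, ground_truth, generated):
    """Assemble the text sent to the evaluator LLM for one metric and one response."""
    return (
        f"Ground-truth:\n{ground_truth}\n\n"
        f"LLM-generated answer:\n{generated}\n\n"
        f"{METRIC_QUESTIONS[metric]}\n"
        "Reply with exactly one word: YES or NO."
    )


def parse_verdict(reply):
    """Map the evaluator's reply to True/False, or None if it cannot be interpreted."""
    words = re.findall(r"[A-Za-z]+", reply)
    if not words:
        return None
    first = words[0].upper()
    if first in ("YES", "VALID"):
        return True
    if first in ("NO", "INVALID"):
        return False
    return None


def metric_score(replies):
    """Share of interpretable verdicts that are positive (e.g. the LAV validity rate)."""
    verdicts = [v for v in map(parse_verdict, replies) if v is not None]
    return sum(verdicts) / len(verdicts) if verdicts else None


print(build_evaluator_prompt("Acc", "Total = $10.00", "The total is $10."))
```

Unparseable replies are excluded rather than counted as negative; how often that happens is itself worth reporting.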

3.2. Subjective Metric: Student Perceptions via Likert-Scale Surveys

The evaluation of interpretability in Large Language Models (LLMs) extends beyond objective metrics and requires an understanding of the user’s subjective experience. For this study, which compares Chain-of-Thought (CoT) prompting with Direct Output generation, student perceptions will be gathered using Likert-scale surveys. This approach allows subjective qualities such as the clarity, trustworthiness, and completeness of AI-generated explanations to be quantified. The use of Likert scales is supported by existing research, such as the work by Huang et al. (2024), who employed a 7-point Likert scale to assess the quality of natural language explanations (NLEs) generated by AI models. Their study focused on properties including informativeness and clarity, providing a precedent for using such scales to measure user-perceived explanation quality. The current study plans to adapt and expand these established constructs into a survey instrument tailored to the comparison of CoT and Direct Output. The survey will define specific items targeting key dimensions of interpretability, including understandability, trustworthiness, completeness, transparency, clarity, and informativeness.

3.2.1. Assessing Clarity, Trustworthiness, and Completeness of Explanations

The subjective assessment of interpretability will heavily rely on student ratings of clarity, trustworthiness, and completeness. These three constructs are considered fundamental to a user’s ability to understand and rely on an AI’s reasoning process. The survey instrument will feature specific Likert-scale items for each construct, allowing for a quantitative comparison between Chain-of-Thought (CoT) and Direct Output.

Clarity refers to the ease with which the explanation can be understood, encompassing simplicity of language, logical flow, and absence of ambiguity. For CoT explanations, clarity involves evaluating whether each step is clearly articulated. For Direct Output, clarity can only be assessed on conciseness and directness, since the output lacks a step-by-step breakdown.

Trustworthiness measures the user’s confidence in the AI’s explanation. This is critical, since an explanation that is not trusted is of little value. Trust can be influenced by perceived logical soundness, consistency with known facts, and perceived reliability. For CoT, trust may be built by observing a logical progression of steps.

Completeness assesses whether the explanation covers all necessary steps and information. A complete explanation should leave the user with no sense that critical parts of the reasoning have been omitted. For CoT, completeness involves evaluating whether the chain of thought includes all relevant intermediate conclusions and links. For Direct Output, which shows no reasoning, completeness in this sense is less applicable, so the survey will instead ask how thoroughly students feel the response conveys how the answer was reached.

The survey will present students with AI-generated solutions (both CoT and Direct Output) to complex problems. After interacting with each type of output, students will rate their agreement with statements related to these dimensions using a Likert scale (e.g., 5-point or 7-point). The specific phrasing will be carefully crafted to be unambiguous and relevant to the student’s experience, for example, “The AI’s explanation of its reasoning was easy to follow” (clarity/understandability) or “I trust the AI’s reasoning process as presented in the explanation” (trustworthiness).
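One way to turn the survey into construct scores, assuming each construct is measured by two items on a 7-point scale. The item IDs and the item-to-construct mapping are hypothetical. Averaging items treats responses as approximately interval; the non-parametric tests in Section 4 avoid relying on that assumption when comparing conditions.

```python
from statistics import mean

# Hypothetical item IDs mapped to the constructs named in the survey design.
ITEMS = {
    "clarity": ["Q1", "Q2"],
    "trustworthiness": ["Q3", "Q4"],
    "completeness": ["Q5", "Q6"],
}


def construct_scores(responses, scale_max=7):
    """Average one participant's Likert responses (1..scale_max) per construct."""
    scores = {}
    for construct, item_ids in ITEMS.items():
        values = [responses[item] for item in item_ids]
        if any(not 1 <= v <= scale_max for v in values):
            raise ValueError(f"response out of range for {construct}")
        scores[construct] = mean(values)
    return scores


# One student's ratings after reading a CoT solution (made-up numbers).
print(construct_scores({"Q1": 6, "Q2": 7, "Q3": 5, "Q4": 5, "Q5": 4, "Q6": 6}))
```

Computing the same scores for each student under both conditions yields the paired data that the within-subjects analysis expects.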

4. Statistical Analysis of Interpretability Scores

The statistical analysis of interpretability scores will be crucial for drawing meaningful conclusions from the experimental data. The primary goal is to determine if there are statistically significant differences in interpretability between Chain-of-Thought (CoT) prompting and Direct Output generation, as perceived by student participants and measured by objective metrics. The data collected will primarily consist of Likert-scale ratings for various interpretability dimensions (e.g., clarity, trustworthiness, completeness) and potentially objective metrics like answer semantic similarity if ground-truth explanations are available and utilized.

4.1. Comparison of Semantic Similarity Scores (CoT vs. Direct Output)

The comparison of semantic similarity scores (e.g., ASS) between the CoT and Direct Output conditions will use statistical tests to determine whether the explicit reasoning provided by CoT yields outputs that are significantly more aligned with ground-truth explanations. If the data meet parametric assumptions (normality and, for independent groups, homogeneity of variances), an independent samples t-test could be used for a between-subjects design, or a paired t-test for a within-subjects design in which the same problems are evaluated under both conditions. If these assumptions are violated, for example because the scores are skewed or the data are ordinal, non-parametric tests are more appropriate.

4.1.1. Applicability of Non-Parametric Tests (Mann-Whitney U, Wilcoxon Signed-Rank)

Non-parametric tests are robust statistical methods that do not assume a specific distribution for the data (e.g., normal distribution) and are often used with ordinal data or interval data that violates parametric assumptions.

If a within-subjects design is employed, where each student evaluates both CoT and Direct Output for different problems (or the same problems in a counterbalanced order), the Wilcoxon signed-rank test is a common non-parametric alternative to the paired t-test. This test assesses whether the paired differences (e.g., each student’s CoT interpretability score minus their Direct Output score) are centered on zero, which is commonly interpreted as testing whether the median difference is significantly different from zero.

Alternatively, if a between-subjects design is chosen, where one group of students evaluates CoT outputs and another group evaluates Direct Output outputs, the Mann-Whitney U test (also known as the Wilcoxon rank-sum test) would be the appropriate non-parametric alternative if parametric assumptions are violated. This test compares the distributions of scores between two independent groups to determine if one group tends to have higher scores than the other.
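Both tests reduce to ranking. The pure-Python sketch below computes the Wilcoxon signed-rank statistic (dropping zero differences) and the Mann-Whitney U statistic, each with a large-sample normal approximation and a tie correction but no continuity correction. In practice a tested routine such as SciPy's `scipy.stats.wilcoxon` and `scipy.stats.mannwhitneyu` would be preferable, particularly for small samples where exact p-values are more appropriate; this version only makes the mechanics visible.

```python
import math
from collections import Counter


def average_ranks(values):
    """1-based ranks, with tied values sharing the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def two_sided_p(z):
    """Two-sided p-value for a standard normal z score."""
    return math.erfc(abs(z) / math.sqrt(2))


def wilcoxon_signed_rank(x, y):
    """Paired test, e.g. x = CoT scores and y = Direct Output scores per student."""
    diffs = [a - b for a, b in zip(x, y) if a != b]  # zero differences are dropped
    n = len(diffs)
    if n == 0:
        raise ValueError("all paired differences are zero")
    ranks = average_ranks([abs(d) for d in diffs])
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    w_minus = sum(r for r, d in zip(ranks, diffs) if d < 0)
    ties = Counter(abs(d) for d in diffs).values()
    var = n * (n + 1) * (2 * n + 1) / 24 - sum(t**3 - t for t in ties) / 48
    z = (w_plus - n * (n + 1) / 4) / math.sqrt(var)
    return {"w_plus": w_plus, "w_minus": w_minus, "n": n, "z": z, "p": two_sided_p(z)}


def mann_whitney_u(a, b):
    """Independent-groups test, e.g. a = CoT group scores, b = Direct Output group scores."""
    n1, n2 = len(a), len(b)
    pooled = list(a) + list(b)
    ranks = average_ranks(pooled)
    u1 = sum(ranks[:n1]) - n1 * (n1 + 1) / 2
    u2 = n1 * n2 - u1
    big_n = n1 + n2
    ties = Counter(pooled).values()
    var = n1 * n2 / 12 * ((big_n + 1) - sum(t**3 - t for t in ties) / (big_n * (big_n - 1)))
    if var <= 0:
        raise ValueError("all observations are tied")
    z = (u1 - n1 * n2 / 2) / math.sqrt(var)
    return {"u1": u1, "u2": u2, "z": z, "p": two_sided_p(z)}


print(wilcoxon_signed_rank([5, 6, 7, 4, 6], [3, 4, 6, 4, 5]))
print(mann_whitney_u([6, 7, 5, 6], [4, 5, 3, 5]))
```

The example ratings are made up; with so few pairs the normal approximation is rough, which is exactly the case where an exact test would be preferred.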

The choice between parametric and non-parametric tests will be guided by diagnostic checks of the data, such as Shapiro-Wilk tests for normality and Levene’s test for homogeneity of variances.
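The decision rule above can be written down explicitly. This sketch takes diagnostic p-values as inputs (for example from `scipy.stats.shapiro` and `scipy.stats.levene`) and returns the name of the test to run; the function name, the 0.05 threshold, and treating a missing Levene result as unverified are assumptions. A non-significant Shapiro-Wilk result only means there is no evidence against normality, not proof of it.

```python
def choose_test(design, shapiro_p_values, levene_p=None, alpha=0.05):
    """Pick a test following the parametric/non-parametric rule described above.

    design: "within" (paired) or "between" (independent groups).
    shapiro_p_values: Shapiro-Wilk p-values; for "within" pass the p-value for the
        paired differences, for "between" pass one p-value per group.
    levene_p: Levene's test p-value, used only for "between" designs.
    """
    normal = all(p >= alpha for p in shapiro_p_values)
    if design == "within":
        return "paired t-test" if normal else "Wilcoxon signed-rank test"
    if design == "between":
        equal_variances = levene_p is not None and levene_p >= alpha
        if normal and equal_variances:
            return "independent samples t-test"
        return "Mann-Whitney U test"
    raise ValueError("design must be 'within' or 'between'")


print(choose_test("within", [0.01]))
```

Fixing this rule before data collection avoids choosing the test after seeing which one gives the more favorable result.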

4.1.2. Rationale for Choosing Non-Parametric Tests for Interval Data (e.g., Cosine Similarity)

Even if the data is interval, such as cosine similarity scores derived from ASS, non-parametric tests might be preferred if the data is not normally distributed. While cosine similarity produces an interval value between -1 and 1, the distribution of these scores across different problems or model outputs may not always be normal, especially with smaller sample sizes or when dealing with complex, skewed data. Non-parametric tests offer a more conservative and distribution-free approach, making them suitable for such scenarios. The central limit theorem might justify the use of t-tests for means if sample sizes are large, but non-parametric tests provide a safer alternative for smaller samples or clearly non-normal data. This ensures the validity of the statistical conclusions drawn regarding the differences in semantic similarity between CoT and Direct Output explanations. The analysis will involve calculating descriptive statistics (means, medians, standard deviations, interquartile ranges) and then applying the chosen inferential statistical tests, along with effect size calculations (e.g., Cohen’s d for t-tests, or r for Wilcoxon tests) to quantify the magnitude of any observed differences.
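The descriptive statistics and effect sizes listed above can be computed with Python's standard `statistics` module. The sketch below is illustrative: the function names are hypothetical, Cohen's d uses the pooled standard deviation for independent groups and d_z (mean difference over the SD of differences) for paired data, and r is computed as z divided by the square root of N. Conventions differ on whether N counts pairs or total observations, so the choice should be reported.

```python
import math
import statistics


def describe(scores):
    """Mean, median, SD, and IQR for one condition's scores."""
    q1, _, q3 = statistics.quantiles(scores, n=4)  # default 'exclusive' method
    return {
        "mean": statistics.mean(scores),
        "median": statistics.median(scores),
        "sd": statistics.stdev(scores),
        "iqr": q3 - q1,
    }


def cohens_d_independent(a, b):
    """Cohen's d for two independent groups, using the pooled standard deviation."""
    n1, n2 = len(a), len(b)
    pooled_var = ((n1 - 1) * statistics.variance(a) + (n2 - 1) * statistics.variance(b)) / (n1 + n2 - 2)
    return (statistics.mean(a) - statistics.mean(b)) / math.sqrt(pooled_var)


def cohens_dz_paired(x, y):
    """Cohen's d_z for paired scores: mean difference over the SD of the differences."""
    diffs = [a - b for a, b in zip(x, y)]
    return statistics.mean(diffs) / statistics.stdev(diffs)


def effect_size_r(z, n):
    """r = z / sqrt(N) for the normal-approximation z of a rank-based test."""
    return z / math.sqrt(n)


print(describe([0.62, 0.71, 0.68, 0.80, 0.75]))
print(cohens_d_independent([2, 4, 6], [1, 3, 5]))
```

Reporting medians and IQRs alongside means and SDs keeps the descriptive summary consistent with whichever test, parametric or not, ends up being used.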

5. Expected Outcomes and Implications

5.1. Hypothesized Advantages of CoT for Interpretability

It is hypothesized that Chain-of-Thought (CoT) prompting will significantly improve the interpretability of LLMs compared to Direct Output generation, particularly for complex, multi-step problem-solving tasks. The explicit articulation of intermediate reasoning steps in CoT is expected to lead to higher perceived clarity, as users can follow the logical progression. This transparency is anticipated to foster greater trustworthiness, as users can inspect the reasoning and verify its soundness rather than relying solely on a “black box” output. CoT explanations are also expected to be rated as more complete in detailing the problem-solving process, giving a fuller understanding of how the final answer was derived. Objectively, CoT outputs might also show higher Answer Semantic Similarity (ASS) to ground-truth explanations, indicating closer alignment with the intended reasoning. Finally, the step-by-step nature of CoT is expected to make the AI’s reasoning more understandable and helpful for students, potentially supporting their own learning and problem-solving skills.

5.2. Potential Benefits of Direct Output in Certain Contexts

While CoT is hypothesized to enhance interpretability for complex tasks, Direct Output generation may offer advantages in specific contexts. The primary benefit of Direct Output is its brevity and speed. For users who are highly confident in the LLM’s capabilities for a particular task, or for problems where the solution is straightforward and the reasoning is self-evident, a direct answer might be preferred to avoid the cognitive load of processing a lengthy CoT explanation. In time-sensitive situations, the quick delivery of a final answer without intermediate steps could be more efficient. Furthermore, if the primary goal is simply to obtain a factual piece of information and the “why” is less critical, Direct Output suffices. It’s also possible that for very simple tasks, a CoT explanation might be perceived as overly verbose or even patronizing, whereas a Direct Output would be more concise and to the point. Therefore, the preference for CoT versus Direct Output might be task-dependent and user-dependent, highlighting a trade-off between depth of understanding and efficiency.

5.3. Implications for LLM Design and User Interaction

The findings from this research will have significant implications for the design of LLMs and user interaction paradigms. If CoT is consistently shown to improve interpretability, it could lead to its wider adoption as a standard prompting technique or even an inherent feature in LLMs designed for tasks requiring transparency and explainability. This would particularly impact educational AI tools, where showing the “working out” can be crucial for student learning. Developers might invest more in refining CoT generation, ensuring its faithfulness and clarity. For user interaction, interfaces could be designed to dynamically offer CoT explanations upon user request or based on task complexity. The research could also inform the development of personalized AI assistants that adapt their explanation style (CoT vs. Direct) based on user preferences, expertise, or the nature of the query. Furthermore, understanding the specific aspects of CoT that contribute most to interpretability (e.g., logical coherence, step granularity, naturalness of language) can guide the creation of better evaluation metrics and training objectives for LLMs, ultimately leading to more trustworthy and user-friendly AI systems. The study may also highlight the need for user training or guidance on how to effectively interpret and utilize CoT explanations.
